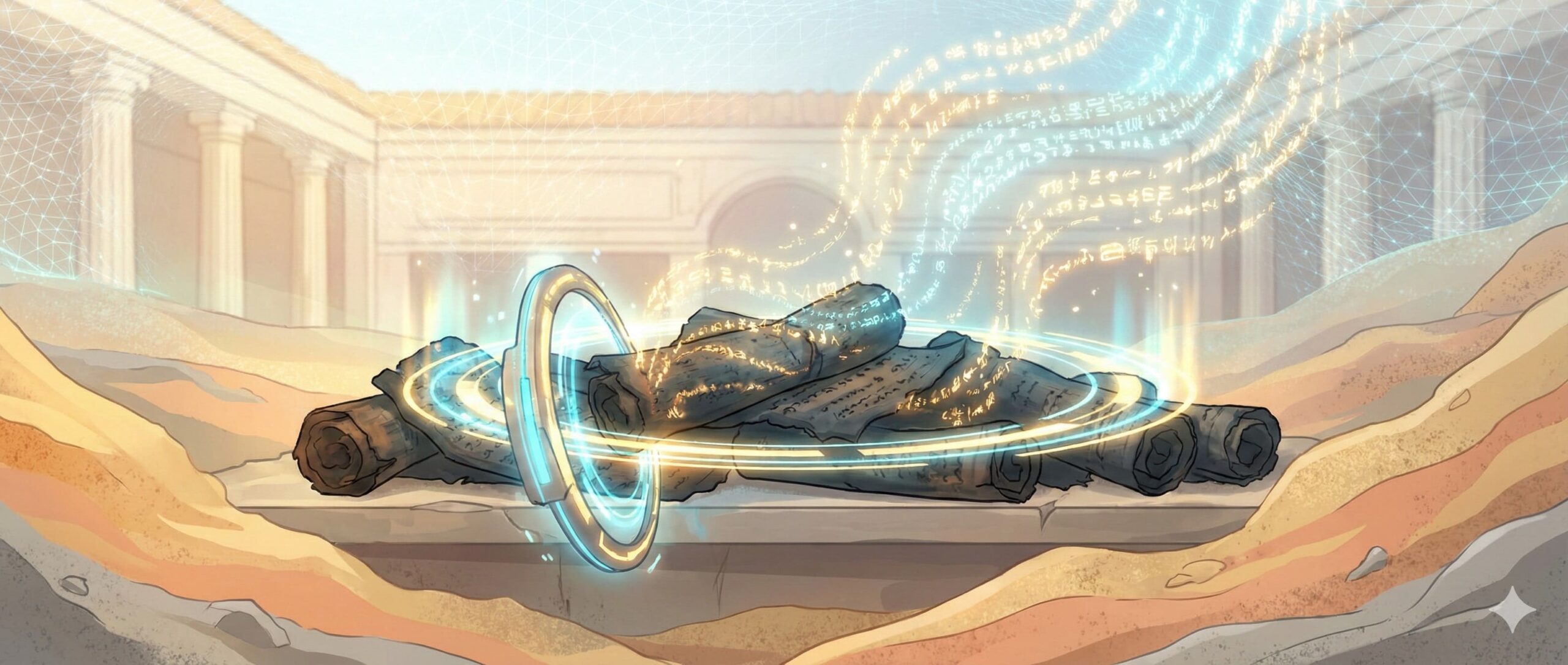

AI reading ancient scrolls was once thought impossible. In 79 AD, Mount Vesuvius erupted, burying the Roman cities of Pompeii and Herculaneum under millions of tons of volcanic material. Pompeii was buried under falling pumice and ash. Herculaneum was engulfed by superheated pyroclastic flows, fast-moving avalanches of hot gas and volcanic debris that carbonized organic matter almost instantly.

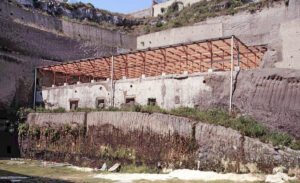

In the 1750s, workers digging a well accidentally struck a luxurious villa, now known as the Villa of the Papyri. Inside, excavators found the only library from classical antiquity known to survive largely intact: roughly 1,800 scrolls.

The Problem:

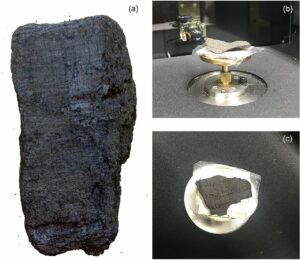

They weren’t really scrolls anymore. The heat had turned them into lumps of charcoal so fragile that they crumble when handled, and early attempts to unroll them destroyed many of them. For some 250 years, most of them sat in Naples: a time capsule of human knowledge that we could hold but could not read.

Papyrus fragments PHerc.1103 (a) and PHerc.110 (b,c). Image contrast and brightness were enhanced to better visualize the details visible to the naked eye on their external surface.

Many scholars regard this as one of the great tragedies of classical archaeology. Pieces of the “source code” of Western civilization (Epicurean philosophy, and perhaps lost works by authors such as Sappho or Aristotle) may be locked inside these lumps of coal, but the “file format” was corrupted by a volcano.

That changed in 2023. In a competition organized by a computer science professor and a former tech CEO, a group of students used AI to do what had long seemed impossible: read a book without opening it.

The “Carbon-on-Carbon” Problem in Ancient Scrolls

Why couldn’t we just X-ray them? X-ray scans have already been used to read sealed, folded letters without opening them.

The answer lies in chemistry.

The ink in these scrolls was carbon-based, made from soot or charcoal.

The papyrus itself is made from plant fibers, which are also mostly carbon.

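When you image the scroll with X-rays, the ink and the papyrus absorb them almost identically, because both are carbon with similar density. To an X-ray scanner, the writing is nearly invisible. (Inks used in later centuries, such as iron-gall ink, contain metal, which absorbs X-rays strongly; that is what makes scans of folded letters readable.) It’s like trying to read black text printed on black paper in a dark room.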
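The same point can be written as two standard imaging relations. X-ray intensity falls off exponentially with the thickness $x$ of material it crosses (the Beer–Lambert law), at a rate set by the material's linear attenuation coefficient $\mu$, and a CT scanner reconstructs a 3D map of $\mu$. Whether ink is visible then comes down to a contrast-to-noise ratio (CNR), where $\sigma$ stands for the noise level in the reconstructed volume. This is a generic textbook formulation, not a model of these specific scans.

```latex
\begin{aligned}
I &= I_0 \, e^{-\mu x}
  && \text{beam intensity after passing through thickness } x \\
\mathrm{CNR} &= \frac{\lvert \mu_{\mathrm{ink}} - \mu_{\mathrm{papyrus}} \rvert}{\sigma}
  && \text{how far ink stands out from papyrus, relative to noise } \sigma
\end{aligned}
```

Because carbon ink and carbon-based papyrus have similar $\mu$, the numerator is close to zero, so the ink's chemistry alone gives the scanner almost nothing to see.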

Two scrolls were scanned using X-ray CT at a resolution of 8 µm. Each scan is roughly 5 TB of data, while each scroll might contain just 10,000 words. (For more detail, see: https://caseyhandmer.wordpress.com/2023/08/05/reading-ancient-scrolls/)
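To get a feel for those numbers, here is a back-of-the-envelope calculation. It uses the 8 µm resolution, the roughly 5 TB scan size, and the 10,000-word estimate quoted above, and it assumes (our assumption, not from the source) that each voxel is stored as a 16-bit value.

```python
# Back-of-the-envelope arithmetic for one scroll's CT scan.
# ASSUMPTION (not from the source): each voxel is stored as a 16-bit value (2 bytes).

VOXEL_SIZE_UM = 8          # scan resolution quoted in the text, in micrometres
BYTES_PER_VOXEL = 2        # assumed 16-bit grayscale voxels
SCAN_BYTES = 5e12          # "roughly 5 TB" per scan
WORDS_PER_SCROLL = 10_000  # rough word-count estimate quoted in the text


def voxels_per_cubic_cm(voxel_size_um: float) -> float:
    """How many cubic voxels of the given edge length fill 1 cm^3."""
    per_edge = 10_000 / voxel_size_um  # 1 cm = 10,000 micrometres
    return per_edge ** 3


def bytes_per_word(scan_bytes: float, words: int) -> float:
    """Average amount of scan data per word of text in the scroll."""
    return scan_bytes / words


n = voxels_per_cubic_cm(VOXEL_SIZE_UM)
print(f"voxels per cm^3: {n:.3g}")                             # 1.95e+09
print(f"GB per cm^3:     {n * BYTES_PER_VOXEL / 1e9:.2g}")     # 3.9
print(f"voxels in scan:  {SCAN_BYTES / BYTES_PER_VOXEL:.2g}")  # 2.5e+12
print(f"MB per word:     {bytes_per_word(SCAN_BYTES, WORDS_PER_SCROLL) / 1e6:.0f}")  # 500
```

At 8 µm, a single cubic centimetre of scroll already needs almost two billion voxels, and the quoted figures work out to roughly 500 MB of scan data per word.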

The “Virtual Unwrapping”

Dr. Brent Seales of the University of Kentucky solved the first half of the puzzle. His team used a particle accelerator (a synchrotron) to scan the scrolls at very high resolution, then developed software to virtually “unroll” the 3D scan into flat 2D sheets.
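The core idea of virtual unrolling can be sketched in a few lines. This is a toy illustration, not the Seales team's software. It assumes the rolled sheet follows a perfect Archimedean spiral inside a synthetic NumPy volume, and it samples the volume along that spiral in every slice to produce a flat 2D image. The functions `spiral_points` and `unroll` are hypothetical names. Real scrolls are crushed and warped, so their surfaces first have to be traced (segmented) as irregular 3D meshes before this kind of sampling is possible.

```python
import numpy as np


def spiral_points(center, r0, pitch, turns, n_samples):
    """(y, x) points along an Archimedean spiral r = r0 + pitch * theta / (2*pi).

    Hypothetical stand-in for a traced papyrus surface in one horizontal slice.
    """
    theta = np.linspace(0.0, 2 * np.pi * turns, n_samples)
    r = r0 + pitch * theta / (2 * np.pi)
    ys = center[0] + r * np.sin(theta)
    xs = center[1] + r * np.cos(theta)
    return ys, xs


def unroll(volume, center, r0, pitch, turns, n_samples):
    """Flatten a spiral sheet inside a (z, y, x) volume into a 2D (z, s) image.

    Each output row is one z-slice; each column is one step along the spiral.
    Nearest-neighbour sampling keeps the sketch short.
    """
    ys, xs = spiral_points(center, r0, pitch, turns, n_samples)
    yi = np.clip(np.rint(ys).astype(int), 0, volume.shape[1] - 1)
    xi = np.clip(np.rint(xs).astype(int), 0, volume.shape[2] - 1)
    # Advanced indexing: for every z, gather the voxels at (yi[k], xi[k]).
    return volume[:, yi, xi]


# Synthetic 20 x 101 x 101 volume containing a spiral "sheet" of value 1.0.
vol = np.zeros((20, 101, 101), dtype=np.float32)
ys, xs = spiral_points((50, 50), r0=5, pitch=8, turns=4, n_samples=2000)
vol[:, np.rint(ys).astype(int), np.rint(xs).astype(int)] = 1.0

flat = unroll(vol, (50, 50), r0=5, pitch=8, turns=4, n_samples=2000)
print(flat.shape)  # (20, 2000): 20 slices by 2000 steps along the sheet
print(flat.min())  # 1.0: every sample landed on the sheet
```

The rolled-up sheet becomes a rectangle: reading along a row of `flat` is like running a finger along the papyrus, even though those voxels are scattered around a spiral in the original volume.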

But the sheets were still blank. The scan worked, but the carbon ink was indistinguishable from the carbon papyrus.

The “Crackle” Discovery

In mid-2023, physicist Casey Handmer spent hours staring at these high-resolution scans and noticed a strange pattern. The ink didn’t show up as a difference in brightness, but it did change the texture of the papyrus fibers. Where the ink sat, the surface had a microscopic “crackle” pattern, like dried mud.

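The human eye could barely see it. But a faint pattern that a person can spot is exactly the kind of signal a machine-learning model, trained on labeled examples, can pick up consistently across an entire scroll.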
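Why texture rather than brightness? The toy example below builds two synthetic patches with the same average intensity: one smooth, one with a fine checkerboard standing in for “crackle”. The mean cannot tell them apart, but a simple local-variation measure can. The `roughness` function is a hypothetical hand-crafted feature for illustration only; the actual ink-detection models learn their features from labeled data rather than using a fixed formula like this.

```python
import numpy as np


def roughness(patch: np.ndarray) -> float:
    """Hypothetical texture feature: mean absolute difference between neighbouring pixels."""
    dx = np.abs(np.diff(patch, axis=1)).mean()  # left-right variation
    dy = np.abs(np.diff(patch, axis=0)).mean()  # up-down variation
    return float((dx + dy) / 2)


# Two synthetic 32 x 32 patches with the same average brightness.
smooth = np.full((32, 32), 0.5)
yy, xx = np.indices((32, 32))
crackled = 0.5 + 0.1 * np.where((yy + xx) % 2 == 0, 1.0, -1.0)  # fine checkerboard texture

print(np.isclose(smooth.mean(), crackled.mean()))  # True: brightness can't separate them
print(roughness(smooth), roughness(crackled))      # 0.0 versus roughly 0.2
```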

The Ink Detection Model

The Vesuvius Challenge: Teaching AI to Read Burned Text

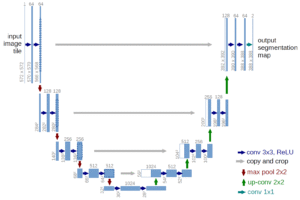

The Vesuvius Challenge was launched to crowd-source the solution. The winners (Youssef Nader, Luke Farritor, and Julian Schilliger) didn’t use a large language model. They used computer vision, specifically a TimeSformer (a Transformer architecture originally designed for video, not a language model) and a U-Net.

How it works:

(Reference: “U-Net: Convolutional Networks for Biomedical Image Segmentation,” Ronneberger et al., 2015.)

3D Input:

The AI takes a small chunk of the 3D X-ray scan around the unrolled surface: a little cube of voxels, the 3D equivalent of pixels.

Texture Analysis:

It analyzes the topography of the papyrus fibers in 3D space. It isn’t looking for “darkness” (ink color); it is looking for “disruption” (fiber shape).

Segmentation:

The U-Net (an architecture originally designed for biomedical image segmentation, such as outlining cells or tumors) paints a probability map: “There is an 85% chance this pixel contains the ‘crackle’ texture.”

The AI effectively recovered the ink from the scars it left on the papyrus 2,000 years ago.
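The three steps above (3D input, texture analysis, segmentation) can be sketched as a sliding-window loop. This is a simplified illustration under stated assumptions, not the winners' code. The input is assumed to be a "surface volume", a stack of layers sampled around the unrolled sheet with shape depth × height × width. `score_patch` is a hypothetical placeholder for the trained network: it looks only at how much each pixel varies through the layers, whereas a real model such as a U-Net or TimeSformer learns its features and uses the whole window as context. Overlapping predictions are simply averaged.

```python
import numpy as np


def score_patch(patch: np.ndarray) -> np.ndarray:
    """HYPOTHETICAL placeholder for a trained ink-detection network.

    Input: a (depth, h, w) sub-volume. Output: an (h, w) map of values in [0, 1).
    """
    texture = np.abs(np.diff(patch, axis=0)).mean(axis=0)  # variation across layers
    return 1.0 - np.exp(-10.0 * texture)


def predict_ink(surface_volume: np.ndarray, tile: int = 64, stride: int = 32) -> np.ndarray:
    """Slide a tile x tile window over the sheet and average overlapping predictions."""
    _, height, width = surface_volume.shape
    prob_sum = np.zeros((height, width))
    counts = np.zeros((height, width))
    for y in range(0, height - tile + 1, stride):
        for x in range(0, width - tile + 1, stride):
            patch = surface_volume[:, y:y + tile, x:x + tile]       # step 1: 3D input
            prob_sum[y:y + tile, x:x + tile] += score_patch(patch)  # steps 2 and 3: 2D probabilities
            counts[y:y + tile, x:x + tile] += 1
    # Pixels never covered by a window keep probability 0.
    return np.divide(prob_sum, counts, out=np.zeros_like(prob_sum), where=counts > 0)


# Synthetic surface volume: 16 layers, smooth everywhere except one rough "inked" square.
rng = np.random.default_rng(0)
vol = np.full((16, 128, 128), 0.5)
vol[:, 40:90, 40:90] += rng.normal(0.0, 0.1, size=(16, 50, 50))
prob = predict_ink(vol)
print(prob[60, 60] > prob[10, 10])  # True: the textured region scores higher
```

Overlapping windows with averaging is a common way to avoid visible seams at tile boundaries; the tile and stride values here are arbitrary.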

The models use small input/output windows. In some cases, the output is even only binary (“ink” vs “no ink”), as shown in these pictures. This makes it extremely unlikely for the model to hallucinate shapes that look like letters.
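The point about small, binary outputs can be made concrete. In the sketch below, which illustrates the idea rather than any winner's actual post-processing, a probability map is cut into small tiles and each tile receives a single yes/no ink label. A letter therefore has to be assembled from many independent local decisions, and no single decision covers enough area to draw a letter shape on its own. The function name `binary_tiles`, the 16-pixel tile, and the 0.5 threshold are arbitrary choices for illustration.

```python
import numpy as np


def binary_tiles(prob_map: np.ndarray, tile: int = 16, threshold: float = 0.5) -> np.ndarray:
    """Collapse a probability map into one ink / no-ink decision per tile x tile block.

    Rows and columns that do not fill a whole tile are dropped.
    """
    h, w = prob_map.shape
    h, w = h - h % tile, w - w % tile
    # Reshape so axes 1 and 3 index pixels inside each block, then average them.
    blocks = prob_map[:h, :w].reshape(h // tile, tile, w // tile, tile)
    return blocks.mean(axis=(1, 3)) > threshold


prob = np.zeros((64, 64))
prob[16:32, 32:48] = 0.9  # one confidently "inked" 16 x 16 block
labels = binary_tiles(prob)
print(labels.astype(int))  # a 4 x 4 grid with a single 1 at row 1, column 2
```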

The Result:

In October 2023, the model produced a clearly legible Greek word: “πορφύρας” (porphyras), meaning “purple.” It was the first word ever read from inside an unopened Herculaneum scroll.

Why It Matters: The Lost Library

This is distinct from the other AI breakthroughs we have discussed. AlphaFold predicts structure (how proteins fold). Stock models predict the future (prices). The Vesuvius AI predicts the past.

Only a tiny fraction of the ancient world’s literature has survived. Entire works vanished, including most of the plays of Sophocles, several treatises of Archimedes, and much of the early historical writing about Rome. For centuries, scholars believed these texts were gone forever.

Unlike forecasting models, AI that reads ancient scrolls lets us reconstruct the past by revealing text that survives only as charcoal.

The Vesuvius Challenge showed that the carbonized scrolls in Naples can, in principle, be read without opening them. More importantly, it opens the door to “Archaeological AI.” Similar techniques might one day read the papyrus used in Egyptian mummy cartonnage, which was often made from recycled scrap papyrus that sometimes preserves otherwise lost texts, including poems.

The Risk:

The main risk here is interpretive. The AI produces a grayscale image of letters; it is still up to human papyrologists to read and translate them. If a model is overfitted, it might turn a random crack in the papyrus into a Greek letter alpha, changing the meaning of a philosophical text. We must trust the texture, not just the prediction.

What does this signify?

When we talk about AI, we usually talk about “intelligence”: agents that can think or act. But this application highlights a different power of AI: Perception.

The AI saw something a human could barely see. It looked at what appeared to be a featureless black void, picked out the texture of the ink, and recovered the word for “purple.” This story reflects human foresight as well as resilience in the face of overwhelming odds.

In 79 AD, a volcano tried to delete a library. In 2023, three students and a neural network hit “Undo.”

This marks the birth of archaeological AI—where machines don’t imagine history, they perceive it.