Neuromorphic Computing Explained: Brain-Inspired Chips Powering the Future of AI

Neuromorphic computing represents a radical departure from how computers have worked for over 70 years. Often described as brain-inspired computing, this approach moves away from conventional, energy-hungry processor architectures and toward systems that function more like the human brain. By mimicking biological neurons and synapses in hardware, neuromorphic computing promises ultra-low power consumption, real-time learning, and massively parallel processing, capabilities that traditional computers struggle to achieve.

1. What Is Neuromorphic Computing?

At its core, neuromorphic computing is the design of computer hardware that directly mimics the physical structure and behavior of the human nervous system.

Unlike conventional computers—where a CPU continuously fetches data from a separate memory unit—neuromorphic chips tightly integrate processing and memory. Artificial neurons perform computation, while artificial synapses store and adapt information. This architecture mirrors the human brain, which contains roughly 86 billion neurons connected by trillions of synapses.

The Biological Blueprint

- Neurons: Artificial neurons remain idle until they receive sufficient input, at which point they “fire” or “spike.”

- Synapses: These connections are plastic, meaning they strengthen or weaken based on activity, enabling learning directly in hardware.

- Spiking Neural Networks (SNNs): Instead of continuous numerical calculations, neuromorphic systems use discrete electrical spikes—closely resembling how biological brains transmit signals.
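
To make the spiking behavior described above concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common simplified neuron model. The function name `simulate_lif` and the parameters `threshold`, `leak`, and `reset` are illustrative assumptions, not the API of any particular chip or library.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.5, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    inputs: input current per time step (hypothetical units).
    Returns a list of 0/1 values, one per time step (1 = spike).
    """
    potential = 0.0
    spikes = []
    for current in inputs:
        # Part of the stored potential leaks away, then new input is added.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)     # enough input has accumulated: fire
            potential = reset    # return to rest after the spike
        else:
            spikes.append(0)     # stay silent
    return spikes


# Three weak inputs do not trigger a spike; a later strong input does.
print(simulate_lif([0.5, 0.5, 0.5, 0.0, 1.0]))  # prints [0, 0, 0, 0, 1]
```

The neuron produces output only when its accumulated input crosses the threshold, which is the "idle until sufficient input" behavior in the list above.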

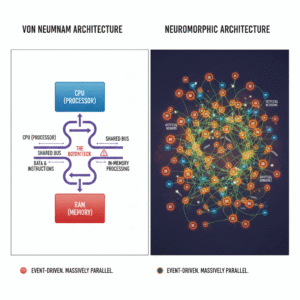

2. Why Do We Need Neuromorphic Computing? The Von Neumann Bottleneck

Traditional computers rely on the Von Neumann architecture, which separates processing and memory. As AI workloads grow, this separation creates a severe performance and energy bottleneck. Data must constantly move between memory and processor—wasting time and power.

This inefficiency contributes to the heat laptops generate under heavy load and is one reason battery-powered AI devices struggle to operate continuously.

Traditional vs. Neuromorphic Computing

| Feature | Traditional (Von Neumann) | Neuromorphic (Brain-Like) |
|---|---|---|
| Data movement | Massive (bottlenecked) | Minimal (in-memory) |
| Power usage | High, always on | Ultra-low, event-driven |
| Processing | Sequential | Massively parallel |
| Adaptability | Fixed logic | Learns on the fly |

Neuromorphic computing largely sidesteps this bottleneck by processing data close to where it is stored.

3. Key Advantages of Neuromorphic Computing

Extreme Energy Efficiency

The human brain operates on roughly 20 watts, less than a traditional incandescent lightbulb. Intel reports that neuromorphic chips such as Loihi can be up to 1,000× more energy-efficient than traditional processors for specific AI workloads, largely because they consume power only when neurons spike.
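
The event-driven idea can be illustrated by counting operations. The sketch below compares a layer that evaluates every connection at every time step with one that does work only for inputs that actually spiked. The function names and the toy spike data are assumptions made for illustration, and operation counts are only a rough proxy for energy, not a measurement of any real chip.

```python
def dense_operations(num_inputs, num_outputs, num_steps):
    """Operations when every connection is evaluated at every time step."""
    return num_inputs * num_outputs * num_steps


def event_driven_operations(spike_trains, num_outputs):
    """Operations when work happens only for inputs that spiked.

    spike_trains: one list per time step, holding a 0/1 value per input.
    Each spike triggers one update per output neuron it connects to.
    """
    total_spikes = sum(sum(step) for step in spike_trains)
    return total_spikes * num_outputs


# Toy data: 4 inputs observed over 3 time steps, mostly silent.
spike_trains = [
    [0, 1, 0, 0],
    [0, 0, 0, 0],
    [1, 0, 0, 1],
]
print(dense_operations(4, 2, 3))                  # prints 24
print(event_driven_operations(spike_trains, 2))   # prints 6
```

The sparser the input activity, the larger the gap between the two counts, which is the intuition behind spending power only when neurons spike.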

Real-Time Learning

Conventional AI models are typically trained offline in large data centers and then deployed with fixed weights. In contrast, neuromorphic systems can adjust synaptic weights during operation, allowing them to adapt quickly to new environments.
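
As a rough sketch of what adjusting a synaptic weight during operation can look like, the function below applies a simplified Hebbian-style local rule: a connection strengthens when the sending and receiving neurons spike together and weakens when the sender spikes alone. The rule, the name `update_weight`, and the learning rate `lr` are illustrative assumptions; real neuromorphic hardware implements its own learning rules.

```python
def update_weight(weight, pre_spike, post_spike, lr=0.1, w_min=0.0, w_max=1.0):
    """Apply one local, online update to a single synaptic weight.

    pre_spike / post_spike: truthy if the sending / receiving neuron
    spiked in this time step.
    """
    if pre_spike and post_spike:
        # Both neurons active together: move the weight toward w_max.
        weight += lr * (w_max - weight)
    elif pre_spike and not post_spike:
        # Sender fired without a response: move the weight toward w_min.
        weight -= lr * (weight - w_min)
    # No sender activity leaves the weight unchanged.
    return min(w_max, max(w_min, weight))


# Repeated coincident spikes gradually strengthen the connection.
weight = 0.5
for _ in range(3):
    weight = update_weight(weight, pre_spike=1, post_spike=1)
print(weight)
```

Each update uses only information available at the synapse itself, so no separate offline training pass is needed in this sketch.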

Ultra-Low Latency

Because computation happens directly in memory, neuromorphic chips can make split-second decisions. This capability is critical for autonomous drones, robotics, and self-driving vehicles where reaction time is everything.

4. Major Neuromorphic Chips and Players (2024–2025)

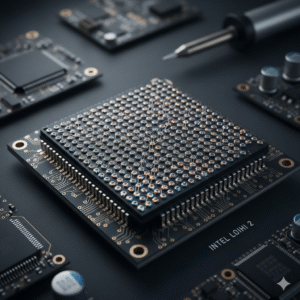

Intel Loihi 2

A research-focused neuromorphic processor supporting up to about one million neurons per chip. It is widely used in research on optimization, robotic control, and real-time sensory processing.

IBM NorthPole

A hybrid architecture that blends traditional and neuromorphic principles. Designed for high‑speed, energy‑efficient image recognition and inference.

Intel Hala Point

Announced in 2024, Hala Point was described by Intel as the world’s largest neuromorphic system at the time, containing 1.15 billion artificial neurons, roughly comparable to the brain of an owl.

BrainChip Akida

One of the first commercially available neuromorphic processors. Akida is already deployed in edge AI devices such as smart sensors, wearables, and medical monitoring systems.

5. Real-World Applications of Neuromorphic Computing

Robotics

Neuromorphic chips enable robots to develop proprioception—an internal sense of body position—allowing autonomous movement without constant cloud connectivity.

Prosthetics

Smart prosthetic limbs can learn a user’s gait and movement patterns in real time, creating more natural and responsive control.

Edge AI

Always‑on sensors can detect specific sounds or signals—such as glass breaking or cardiac irregularities—while consuming almost no battery power.
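
One way an always-on sensor can stay quiet until something happens is to report only changes in its signal. The sketch below shows a generic send-on-delta encoder, assuming a hypothetical function `delta_encode` and made-up sample values; it is not the interface of any specific sensor or neuromorphic chip.

```python
def delta_encode(samples, threshold):
    """Turn a sampled signal into sparse change events.

    Emits (index, +1) or (index, -1) whenever the signal has moved by at
    least `threshold` since the last emitted event; otherwise emits nothing.
    """
    events = []
    if not samples:
        return events
    reference = samples[0]
    for index, value in enumerate(samples[1:], start=1):
        change = value - reference
        if abs(change) >= threshold:
            events.append((index, 1 if change > 0 else -1))
            reference = value  # later changes are measured from here
    return events


# A quiet signal with one burst yields only two events.
print(delta_encode([0, 0, 0, 5, 5, 5, 0, 0], threshold=3))  # prints [(3, 1), (6, -1)]
```

A constant signal produces no events at all, so downstream processing has nothing to do until the input changes.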

Scientific Simulation

Neuromorphic systems are being explored for modeling biological processes or climate dynamics, with the aim of using far less energy than traditional supercomputers.

6. Challenges Facing Neuromorphic Computing

Despite its promise, neuromorphic computing is not yet mainstream due to several challenges:

- Programming complexity: There is not yet a standard, widely adopted software ecosystem for spiking neural networks, comparable to what Python is for conventional AI or Windows is for desktop software.

- Manufacturing limitations: Producing memristors, one class of hardware component that can act like a synapse, at scale remains difficult.

- Precision trade‑offs: Traditional processors still outperform neuromorphic chips in tasks requiring exact numerical accuracy.

Summary: The Future of Brain-Inspired Computing

Neuromorphic computing is not designed to replace your laptop or smartphone. Instead, it brings intelligence to the edge—to devices like drones, sensors, robots, and prosthetics—by making AI as efficient and adaptive as the biology it seeks to emulate.

As energy efficiency and real‑time learning become critical to the future of AI, neuromorphic computing stands out as one of the most promising paths forward.
