Weather forecasting has long been limited by chaos. In 1961, an MIT meteorologist named Edward Lorenz was running a simulation of weather patterns on a primitive computer. He wanted to see a sequence again, so he re-entered the numbers from a printout. To save time, however, he rounded one of the starting values from 0.506127 to 0.506. He assumed that a difference of less than one part in a thousand (smaller than a puff of breeze) would be inconsequential.

The Result:

He went to get a coffee, and when he returned, the simulation had diverged completely. The weather was unrecognizable. The tiny rounding error had cascaded into a completely different future. Lorenz had stumbled onto what would become Chaos Theory. He later famously asked:

“Does the flap of a butterfly’s wings in Brazil set off a tornado in Texas?”

Left: Edward Norton Lorenz

Right: A sample solution in the Lorenz attractor

For the last 60 years, this “Butterfly Effect” has been the hard limit of human foresight. It is why, despite supercomputers the size of warehouses, your weather app still lies to you about rain next Tuesday.

Physics gave us the laws to understand the weather, but chaos limited how far ahead we could predict it. Then, in late 2023, Google DeepMind published GraphCast, an AI model that didn’t just beat forecasts from the world’s best supercomputers; it outclassed them. It turned a problem that takes hours on a supercomputer into one that runs in under a minute on a single machine.

Here is how AI transitioned from folding proteins and predicting stocks to taming the wind itself.

The Old Way: The Resolution Wall

To understand the magnitude of the shift, we must first understand how weather is predicted today. For decades, forecasters have used a method called Numerical Weather Prediction (NWP).

Imagine dividing the Earth’s atmosphere into a giant 3D grid: millions of little boxes stacked on top of each other. Each box holds values for temperature, pressure, wind speed, and humidity, initialized from observations. Then, the model steps forward the equations of fluid dynamics (the “primitive equations,” a simplified form of the Navier-Stokes equations) to simulate how the air in Box A will push the air in Box B.
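To make the “Box A pushes Box B” idea concrete, here is a deliberately tiny sketch: a one-dimensional ring of grid boxes holding a temperature anomaly, carried along by a constant wind with a first-order upwind finite-difference scheme. Real NWP models solve coupled 3D equations with far more sophisticated numerics; the function names, box count, wind speed, and time step below are hypothetical choices made for illustration only.

```python
import numpy as np

def advect_upwind(field: np.ndarray, wind: float, dx: float, dt: float, steps: int) -> np.ndarray:
    """Carry `field` along a periodic 1D row of boxes with a constant eastward wind.

    First-order upwind scheme: each box is nudged toward the value in the
    neighbouring box the wind blows from.
    """
    courant = wind * dt / dx  # fraction of a box the air travels per step
    # This explicit scheme is only stable if air moves at most one box per step.
    if not 0.0 <= courant <= 1.0:
        raise ValueError(f"Courant number {courant:.2f} must be in [0, 1]")
    f = field.astype(float)  # astype returns a copy, so the input is untouched
    for _ in range(steps):
        upwind = np.roll(f, 1)  # box i receives air from box i - 1
        f = f - courant * (f - upwind)
    return f

def centre_of_mass(field: np.ndarray) -> float:
    """Weighted mean box index of the anomaly."""
    return float(np.sum(np.arange(field.size) * field) / np.sum(field))

# Hypothetical setup: 100 boxes of 9 km, a warm anomaly in boxes 10-19, 10 m/s wind.
dx, wind, dt = 9_000.0, 10.0, 450.0  # metres, m/s, seconds -> Courant number 0.5
temp = np.zeros(100)
temp[10:20] = 1.0  # +1 K anomaly

after = advect_upwind(temp, wind, dx, dt, steps=40)  # 40 x 450 s = 5 hours
print(f"anomaly centre moved from box {centre_of_mass(temp):.1f} to box {centre_of_mass(after):.1f}")
# → anomaly centre moved from box 14.5 to box 34.5
```

In five simulated hours at 10 m/s the anomaly travels 180 km, or 20 boxes. The scheme also smears the anomaly out (numerical diffusion), which is one reason operational models use much more accurate numerics and why finer grids cost so much more compute.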

The Problems:

- Computational Cost: To predict 10 days of weather, the world’s leading system, run by the European Centre for Medium-Range Weather Forecasts (ECMWF), uses a supercomputer with nearly 1 million processor cores. It takes hours to crunch the numbers.

- The Resolution Wall: We can’t make the boxes infinitely small. Currently, the boxes are about 9 km wide. But clouds, turbulence, and thunderstorms are often smaller than 9 km. They slip through the cracks of the simulation and can only be approximated with simplified rules, introducing errors that grow exponentially as the forecast runs.
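That exponential error growth is easy to reproduce. The sketch below integrates the three-variable system Lorenz published in 1963 (a simpler cousin of the 12-variable model in his 1961 anecdote) twice: once from a starting value of 0.506127 and once from the same value rounded to 0.506. The classic parameters σ = 10, ρ = 28, β = 8/3 are standard; the other starting coordinates, the step size, and the run length are arbitrary illustrative choices.

```python
import numpy as np

SIGMA, RHO, BETA = 10.0, 28.0, 8.0 / 3.0  # Lorenz's classic 1963 parameters
DT, STEPS = 0.01, 3000                     # integrate 30 model time units

def lorenz_rhs(s: np.ndarray) -> np.ndarray:
    """Time derivative of the Lorenz-63 state (x, y, z)."""
    x, y, z = s
    return np.array([SIGMA * (y - x), x * (RHO - z) - y, x * y - BETA * z])

def integrate(s0) -> np.ndarray:
    """Fourth-order Runge-Kutta integration; returns shape (STEPS + 1, 3)."""
    s = np.asarray(s0, dtype=float)
    traj = np.empty((STEPS + 1, 3))
    traj[0] = s
    for i in range(1, STEPS + 1):
        k1 = lorenz_rhs(s)
        k2 = lorenz_rhs(s + 0.5 * DT * k1)
        k3 = lorenz_rhs(s + 0.5 * DT * k2)
        k4 = lorenz_rhs(s + DT * k3)
        s = s + DT / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
        traj[i] = s
    return traj

full = integrate([0.506127, 1.0, 1.0])
rounded = integrate([0.506, 1.0, 1.0])        # same start, rounded to three decimals
gap = np.linalg.norm(full - rounded, axis=1)  # distance between the two "weathers"

for i in range(0, STEPS + 1, 500):
    print(f"t = {i * DT:4.1f}: separation = {gap[i]:.2e}")
```

The printed separation starts near 1e-4 and, after some model time, becomes as large as the attractor itself: the two runs end up describing completely different “weather.”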

The New Way: Graph Neural Networks (GNNs)

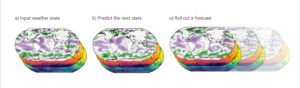

Just like AlphaFold ignored the physics of atomic bonds to focus on evolutionary history, GraphCast ignores the laws of fluid dynamics to focus on historical data. It doesn’t “solve” the equations. It looks at the “frames” of the weather from the last 6 hours and predicts the next frame.

Standard images use 2D grids (pixels), but the Earth is a sphere. If you try to put a flat grid on a round planet, you get distortion at the poles. GraphCast solves this by using a Graph Neural Network. It treats the atmosphere as a “Mesh” of nodes (interconnected points), similar to a geodesic dome. A node over London “messages” the node over Paris about the wind heading its way.
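The “messaging” idea is the core of a graph neural network layer. Below is a minimal, untrained sketch of one round of message passing in NumPy: every directed edge computes a message from its two endpoint nodes, each node sums its incoming messages, and then updates its own state. The three-node graph, the two wind features, and the random weight matrices `W_msg` and `W_upd` are hypothetical stand-ins; GraphCast itself uses learned neural networks on an icosahedral mesh with tens of thousands of nodes.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy mesh: node 0 = London, 1 = Paris, 2 = Berlin.
# Node features: [eastward wind, northward wind] in m/s.
nodes = np.array([[5.0, -2.0], [1.0, 0.0], [0.0, 3.0]])
# Directed edges (sender -> receiver); each pair of nodes talks both ways.
senders = np.array([0, 1, 1, 2, 0, 2])
receivers = np.array([1, 0, 2, 1, 2, 0])

hidden = 4
W_msg = rng.normal(size=(4, hidden))      # stand-in for a learned edge network
W_upd = rng.normal(size=(2 + hidden, 2))  # stand-in for a learned node network

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

def message_passing_step(nodes, senders, receivers):
    # 1. Each edge builds a message from its sender and receiver features.
    edge_inputs = np.concatenate([nodes[senders], nodes[receivers]], axis=1)
    messages = relu(edge_inputs @ W_msg)
    # 2. Each receiver sums all incoming messages (np.add.at handles repeated indices).
    inbox = np.zeros((len(nodes), hidden))
    np.add.at(inbox, receivers, messages)
    # 3. Each node updates from its old state plus its inbox
    #    (a residual update: the layer predicts a change to the state).
    return nodes + np.concatenate([nodes, inbox], axis=1) @ W_upd

updated = message_passing_step(nodes, senders, receivers)
print(updated.shape)  # (3, 2)
```

Because messages are summed, the result does not depend on how the nodes are numbered, which is what lets the same layer run on any mesh, including one wrapped around a sphere with no special treatment of the poles.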

For inputs, GraphCast requires just two sets of data: the state of the weather 6 hours ago, and the current state of the weather. The model then predicts the weather 6 hours in the future. This process can then be rolled forward in 6-hour increments to provide state-of-the-art forecasts up to 10 days in advance. (https://deepmind.google/blog/graphcast-ai-model-for-faster-and-more-accurate-global-weather-forecasting/)
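The rollout described above amounts to a loop that feeds each prediction back in as input. In this sketch, `rollout` and `persistence_of_trend` are hypothetical names, and `persistence_of_trend` is a toy placeholder for the trained network (any function mapping the previous and current state to the next one). The point is the bookkeeping: two states in, one state out, and 40 six-hour steps to reach 10 days.

```python
from typing import Callable, List

import numpy as np

State = np.ndarray  # in a real system, e.g. a (lat, lon, variables) array
StepFn = Callable[[State, State], State]

def rollout(step_model: StepFn, prev: State, curr: State,
            hours: int = 240, step_hours: int = 6) -> List[State]:
    """Autoregressively roll a two-input, one-output model forward."""
    if hours % step_hours:
        raise ValueError("forecast length must be a multiple of the step size")
    forecasts = []
    for _ in range(hours // step_hours):
        nxt = step_model(prev, curr)
        forecasts.append(nxt)
        prev, curr = curr, nxt  # slide the two-state window forward 6 hours
    return forecasts

# Toy stand-in model: extend the latest 6-hour trend.
def persistence_of_trend(prev: State, curr: State) -> State:
    return curr + (curr - prev)

traj = rollout(persistence_of_trend, prev=np.array([280.0]), curr=np.array([281.0]))
print(len(traj), traj[-1])  # 40 [321.]
```

Because every step consumes the model’s own previous output, any error made early in the forecast is carried into all later steps, which is why long autoregressive rollouts are hard to get right.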

The Impact: Democratizing Safety

The publication of GraphCast in Science (2023) sent shockwaves through meteorology, not just because it was accurate, but because it was fast.

- Efficiency:

Old Way: Hours of computation on a supercomputer with hundreds of machines.

GraphCast: Under 60 seconds on a single Google TPU v4 machine.

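This is an energy efficiency improvement of roughly 1,000x. Because a forecast needs only a single accelerator rather than a supercomputer, it could give developing nations state-of-the-art weather prediction without a billion-dollar machine. In principle, a small forecasting office in Bangladesh could predict cyclones with the same model a major European center uses. (GraphCast does still need a starting snapshot of the atmosphere, which today comes from the traditional data-assimilation systems run by centers like ECMWF.)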

- Real World Proof (Hurricane Lee):

In September 2023, Hurricane Lee was churning in the Atlantic. Standard models struggled to predict where it would land, oscillating between Boston and Maine. GraphCast predicted, about nine days in advance, that it would make landfall in Nova Scotia. Traditional forecasts didn’t lock in on that location until about six days out. In the world of disaster response, that three-day head start can be the difference between life and death. (Source: MIT Technology Review article)

- Accuracy:

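DeepMind compared GraphCast against ECMWF’s “HRES” system, widely regarded as the gold standard of deterministic weather forecasting. GraphCast was more accurate on more than 90% of the 1,380 test targets (combinations of variables such as temperature, pressure, wind speed, and humidity, at various altitudes and forecast lead times).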
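A scorecard like this is built by computing an error metric for each target and counting where one system beats the other. The sketch below assumes a latitude-weighted RMSE, a common choice for global grids because grid cells shrink toward the poles, and counts a “win” whenever the model’s error is lower than the baseline’s. The function name, grid size, number of targets, and all data are hypothetical; the arrays are synthetic random numbers, not GraphCast or HRES results.

```python
import numpy as np

def lat_weighted_rmse(forecast: np.ndarray, truth: np.ndarray, lats_deg: np.ndarray) -> float:
    """RMSE over a (lat, lon) grid, weighting each latitude row by cos(latitude)."""
    w = np.cos(np.deg2rad(lats_deg))
    w = w / w.mean()  # normalise so a uniform error e gives RMSE e
    sq_err = (forecast - truth) ** 2
    return float(np.sqrt((w[:, None] * sq_err).mean()))

rng = np.random.default_rng(42)
lats = np.linspace(-90, 90, 19)  # 10-degree rows, poles included
n_lon = 36
n_targets = 12                   # hypothetical (variable, level, lead time) combinations

wins = 0
for _ in range(n_targets):
    truth = rng.normal(size=(lats.size, n_lon))
    model = truth + rng.normal(scale=0.8, size=truth.shape)     # synthetic "model"
    baseline = truth + rng.normal(scale=1.0, size=truth.shape)  # synthetic "baseline"
    if lat_weighted_rmse(model, truth, lats) < lat_weighted_rmse(baseline, truth, lats):
        wins += 1

print(f"model beats baseline on {wins}/{n_targets} targets ({100 * wins / n_targets:.0f}%)")
```

Without the cosine weighting, the many tiny polar cells of a latitude-longitude grid would count as much as the large equatorial ones and distort the score.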

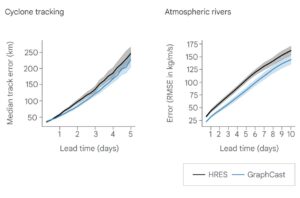

Severe-event prediction – how GraphCast and HRES compare.

Left: Cyclone tracking performance. As the lead time for predicting cyclone movements grows, GraphCast maintains greater accuracy than HRES.

Right: Atmospheric river prediction. GraphCast’s prediction errors are markedly lower than HRES’s for the entirety of their 10-day predictions. (https://deepmind.google/blog/graphcast-ai-model-for-faster-and-more-accurate-global-weather-forecasting/)

The Risks: The Problem of “Why?”

As with Jim Simons’ Medallion Fund, we run into the “Black Box” problem. The Old Way (NWP) was interpretable. If the model said it would rain, we could trace the math and see why (e.g., “The pressure gradient force exceeded X”). GraphCast gives us the answer, but not the reasoning. It says “Hurricane in Nova Scotia,” but it cannot explain the physics behind it.

That opacity becomes a real fragility when we ask a harder question: will the model generalize to a changing climate?

The Stationarity Problem (Again)

Remember the “Non-Stationarity” issue from stock markets? Financial models fail because market rules change. Weather models face a similar threat: Climate Change.

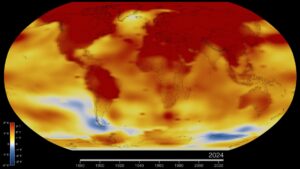

GraphCast was trained on reanalysis data (a reconstruction of past weather) from 1979–2017. But the climate of 2025 is measurably different from that of 1980: the oceans are warmer, and the jet streams may be growing more erratic. If the climate shifts too far from what the model saw in training, the patterns it learned might become obsolete. An AI trained on history might fail to predict “Black Swan” climate events that have never happened before.
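A toy “analog” forecaster makes this failure mode stark: predict tomorrow by finding the most similar day in the historical record and returning what happened the day after. This nearest-neighbour technique is far simpler than GraphCast, and a deep network is much more flexible than a lookup table, but neither comes with guarantees outside its training data. Here the limit is absolute: the forecast can never exceed the hottest value in the record. The function name and the temperature series are hypothetical and synthetic.

```python
import numpy as np

def analog_forecast(history: np.ndarray, today: float) -> float:
    """Nearest-neighbour analog forecast: find the past day most similar
    to today and return the value observed on the following day."""
    candidates = history[:-1]  # days that have a known "next day"
    best = int(np.argmin(np.abs(candidates - today)))
    return float(history[best + 1])

rng = np.random.default_rng(1)
# Synthetic "training era": daily peak temperatures oscillating around 30 °C.
history = 30 + 3 * np.sin(np.linspace(0, 20 * np.pi, 2000)) + rng.normal(0, 1, 2000)

record = float(history.max())
heatwave_today = record + 5.0  # an unprecedented day
prediction = analog_forecast(history, heatwave_today)

print(f"record in training data: {record:.1f} °C")
print(f"today: {heatwave_today:.1f} °C, analog forecast for tomorrow: {prediction:.1f} °C")
```

However extreme today is, the analog method answers with something it has already seen, which is exactly the blind spot for events with no historical precedent.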

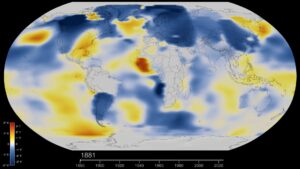

These color-coded maps show changing global surface temperature anomalies. Normal temperatures are shown in white, higher-than-normal temperatures in red, and lower-than-normal temperatures in blue. Normal temperatures are calculated over the 1951–1980 baseline period. The first image represents global temperature anomalies in 1881; the second represents global temperature anomalies in 2024. Download this visualization from NASA Goddard’s Scientific Visualization Studio: https://svs.gsfc.nasa.gov/5450

There is also the risk of complacency. If we rely entirely on AI to intuit the weather, we might stop teaching humans the physics of why the weather happens. We risk becoming a civilization that knows what will happen, but has forgotten how the world works.

Significance of GraphCast

We have not “solved” chaos. The Butterfly Effect is a fundamental property of the atmosphere, not a bug in our code. We still cannot predict the weather 30 days out; the chaos is too great.

However, AI has pushed the boundary of the “fog of war.” Just as AlphaFold handed us the source code of biology, GraphCast has given us a looking glass into the immediate future of our planet.

The significance of this cannot be overstated. Weather is the operating system of our planet. It dictates our agriculture, our shipping, our energy grids, and our safety. By moving from calculation to pattern recognition, we have taken a major step toward putting supercomputer-grade forecasts within everyone’s reach.

For centuries, we looked at the sky and prayed to the gods for rain. Then, we built machines to calculate the rain. Now, we have built intelligences that simply “intuit” the rain. We are no longer just calculating the motion of heavenly bodies; we are finally beginning to calculate the madness of the wind.
