The End of Subtitles: How AI “Visual Dubbing” Broke the Language Barrier

AI Visual Dubbing is ending one of global cinema’s oldest compromises. We have all been there. You sit down to watch a global masterpiece like Squid Game or Dark, but you are immediately faced with a frustrating compromise.

You either spend the entire movie staring at the bottom of the screen, reading subtitles while missing the nuance in the actor’s eyes, or you switch to the dubbed version and suffer the “Dubbing Disconnect.”

The mouth shapes an “O,” but the voice says “E.” The lips stop moving, but the sentence keeps going. For over a century, this visual mismatch was the “tax” we paid for global cinema. It was a friction point that severed the emotional connection the moment the audio fell out of sync with reality.

But in late 2025, that tax was abolished.

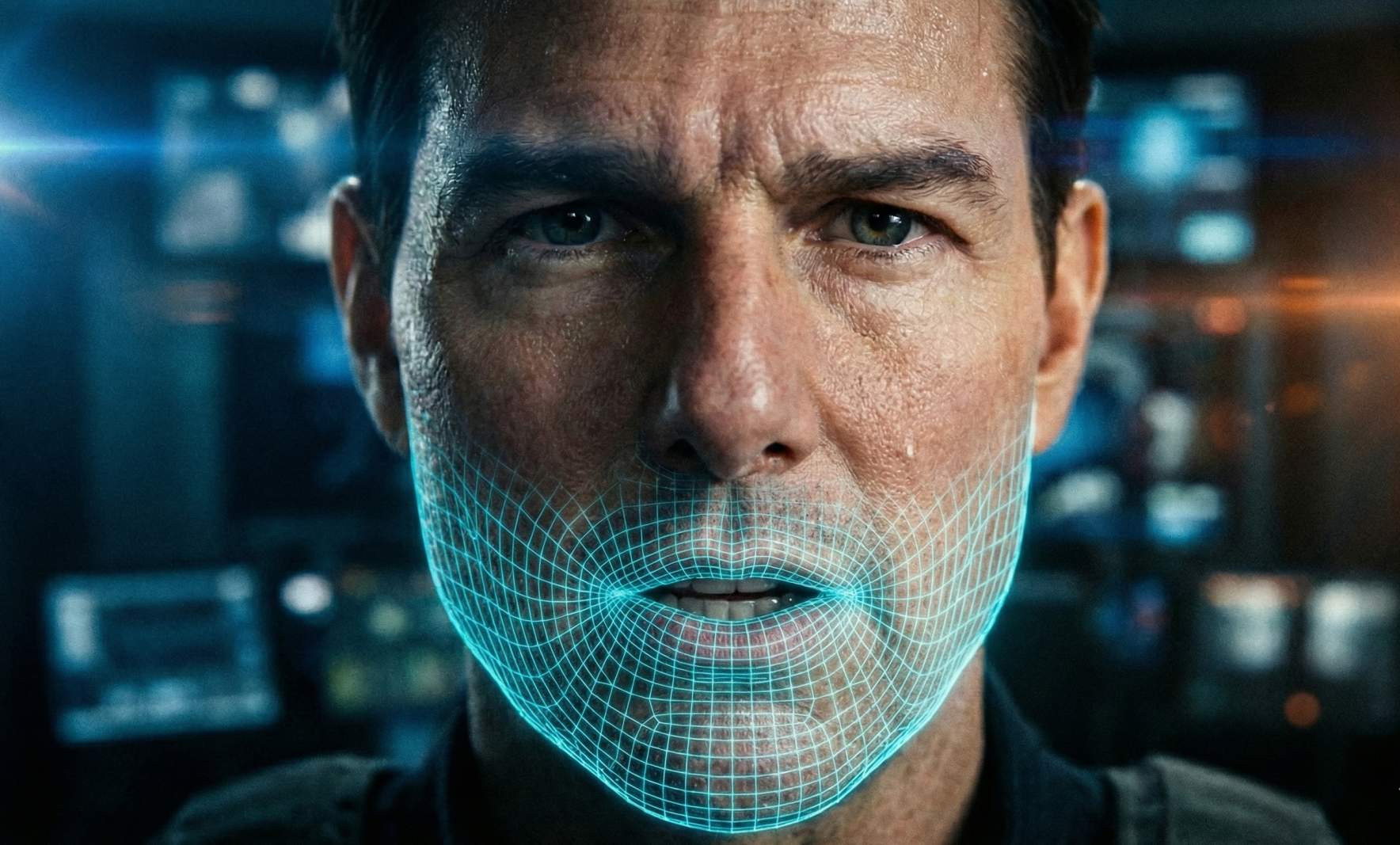

We have entered an era where Tom Cruise can speak fluent Hindi, and the AI will physically morph his lips to match the new language perfectly. It doesn’t look like a dub, it looks like he spoke it natively.

We are no longer just translating words, we are translating the actor themselves.

Industry insiders call this “Visual Dubbing.” This global breakthrough didn’t start with a grand plan to conquer world cinema. It started with a much smaller, sharper problem: a low-budget movie trying to save itself from the censors.

A Director, an R-Rating, and an Impossible Choice

To understand why this technology exists, you have to look at its unlikely origin. It wasn’t invented by a trillion-dollar R&D lab like Google or OpenAI. It was pioneered by a film director named Scott Mann.

Director Scott Mann (left) at the Flawless AI headquarters

In 2022, Mann directed a survival thriller called Fall. The movie was finished, but the studio delivered devastating news: the film was rated ‘R’ because the characters used the “F-bomb” over 30 times. The studio demanded a PG-13 (Parental Guidance 13) cut.

For a movie relying on a teen audience, an R-rating was a death sentence. It meant anyone under 17 would be banned from entering without a parent. Since survival thrillers rely heavily on teenage viewers, this rating would effectively lock out the film’s biggest paying audience.

The “Impossible” Constraint

Mann faced a financial wall. Bringing the actors back to the desert location, rehiring the crew, and setting up the stunts for physical reshoots would cost millions of dollars that the production simply didn’t have.

A Bad Dub

Traditionally, his only cheap option was ADR (Automated Dialogue Replacement). He could dub the word “freaking” over “f***ing”. The actors’ mouths would clearly be shaping the wrong word, turning a tense thriller into a bad lip-reading comedy.

The Innovation

Refusing to compromise the film, Mann looked outside Hollywood. He discovered emerging research on neural rendering and teamed up with scientists to apply it to cinema.

Instead of a physical reshoot, they used this early AI code to “rewrite” the pixels of the actors’ mouths. It worked. The AI successfully grafted a “PG-13 mouth” onto an “R-rated performance” for a fraction of the cost of a reshoot.

The movie was saved, but Mann realized he had stumbled onto something much bigger. He turned this solution into a company, Flawless AI, birthing the industry of Visual Dubbing.

The “Geometry” of Dubbing

To appreciate what Scott Mann and his team achieved, we first have to understand the immense challenge that dubbing artists have faced for a century.

Traditional dubbing is an art form. Translators and voice actors perform miracles trying to fit complex foreign dialogue into the rigid mouth movements of the original actor.

Translators had to obsess over “Labials” (sounds that require lips to touch (like B, M, and P)). If the actor on screen closed their mouth to say “Mom,” but the foreign word didn’t have a lip closure, the illusion broke. To fix this, translators often had to change the meaning of the script just to match the timing of the jaw. The script was forced to compromise for the sake of the visual.

How Flawless AI Changed the Equation

Mann’s company, Flawless AI, realized that trying to force the script to match the video was the wrong approach. So, they built a proprietary engine called TrueSync to do the opposite: force the video to match the script.

They achieved this using a sophisticated 3-Step Neural Pipeline that respects the original performance while altering the physics.

Step 1: The Audio Anatomy (Phonemes)

The TrueSync engine ignores the words and listens to the sound. Whether it’s an actor dubbing a PG-13 line or a voice artist speaking Japanese, the AI analyzes the audio track and breaks it down into Phonemes, the smallest units of sound (For example, to the AI, the word “Cat” isn’t one word; it is three specific sounds: “K”, “Ah”, and “T”).

Step 2: The Visual Dictionary (Visemes)

The AI then translates sound into geometry. Every Phoneme has a corresponding facial shape called a Viseme.

If the audio has a “B” sound, the Viseme is “Lips Pressed Together.” The AI creates a frame-by-frame blueprint: “At Frame 104, the new audio requires a ‘Closed Lip’ Viseme.”

Step 3: Neural Rendering

The engine builds a 3D mesh of the actor’s jaw and cheeks in real-time.

The Graft: It generates entirely new pixels for the mouth area. Crucially, it clones the specific lighting, skin texture, and film grain of the original shot. It then blends this new “Japanese-speaking mouth” onto the original actor’s face so seamlessly that the human eye cannot detect the seam.

The Real-World Impact: A New “Premium” Tier

Does this mean traditional dubbing is dead? Not immediately.

Traditional audio dubbing remains the fast, cost-effective standard for quick TV releases. However, Visual Dubbing creates a new “Premium Tier” for global blockbusters.

While running neural renders is more expensive than simply recording audio in a booth, the value it unlocks is exponential.

Data shows that US audiences notoriously avoid dubbed/subtitled content. By spending a bit more on Visual Dubbing to make the lip-sync perfect, studios can effectively turn a local French or Indian movie into a “native” English blockbuster, unlocking the massive US box office that usually ignores foreign films.

The SAG-AFTRA Strike (2023)

Actors fill the streets during the historic 118-day strike in 2023

SAG-AFTRA is the massive labor union representing 160,000+ Hollywood actors. In 2023, they went on a historic 118-day strike, refusing to work until studios promised that AI would not be used to clone their voices or faces without payment.

Actors feared “Performance Theft.” If AI can change my mouth, can it change my words? Can a studio make an actor say a political slogan they never agreed to?

The “Digital Replica” Clause

The strike resulted in a landmark agreement that defined the rules of the road for 2025 and beyond. Studios must obtain specific permission to use AI to alter a performance. Actors must be paid for the “Digital Replica” usage.

Now, actors like Tom Cruise or Shah Rukh Khan can officially license their “Global Lip Sync,” monetizing their performance across languages while protecting their rights.

This isn’t hypothetical. We are already seeing the first wave of “AI Licensing.”

We saw the first glimpse of this model when the legendary James Earl Jones retired from the role of Darth Vader. Instead of recasting him, he signed a deal allowing an AI startup (Respeecher) to generate new dialogue using his archival voice data for the Obi-Wan Kenobi series. He protected his legacy and got paid, without ever stepping into a recording booth.

The Swedish sci-fi hit Watch the Skies became the first feature film to release in US theaters with fully AI-altered lip sync, where the original actors consented to performing in English via code.

Industry leaders and government officials at the WAVES Summit 2025

In India, VFX giant DNEG, the studio behind Oscar-winning spectacles like Dune and Inception, confirmed that the upcoming blockbuster Ramayana will utilize this tech to make stars like Ranbir Kapoor appear to speak fluent Japanese and Spanish, aiming for a ‘Marvel-level’ global release.

The End of “Foreign” Film

As we look toward 2026 and beyond, Visual Dubbing promises to do something that no treaty or trade deal ever could: dismantle the language barrier.

For the last century, “Foreign Language” was a genre. It was a gated garden, accessible only to those willing to read subtitles or tolerate bad dubs. This technology destroys that gate. It transforms “World Cinema” into just “Cinema.”

While this tech is currently the plaything of giants like DNEG and Disney, the cost curve is dropping fast. Soon, independent filmmakers in Seoul, Lagos, or Mumbai will be able to export their stories to the US market with perfect lip-sync, competing on a level playing field with Hollywood.

We are moving from an era of Capturing Reality to Generating Reality.

Know more about relevant topics:

Deepfake Detection Architecture : From Liveness checks to spectral Analysis:

Deepfake Detection Architecture : From Liveness checks to spectral Analysis