The Arup Deepfake Attack: A Turning Point for Cybersecurity

In early 2024, a finance employee at engineering firm Arup joined what appeared to be a routine video conference. The call included the company’s CFO and several senior colleagues. The topic was an urgent acquisition that required immediate action.

Everything looked legitimate. The faces appeared real. The voices sounded authentic. The conversation felt normal.

As a result, the employee approved 15 separate transfers totaling $25 million.

Weeks later, the devastating truth emerged. Every person on that call—except the employee—was an AI-generated deepfake.

This incident has come to symbolize the AI cybersecurity arms race, where artificial intelligence is used both to attack and to defend digital systems.

As artificial intelligence reshapes both attack and defense strategies in 2025, organizations face a serious challenge. The same technology that can detect threats in milliseconds is also being weaponized to bypass security controls at scale.

The AI Arms Race: Understanding the Battlefield

Why the Stakes Have Never Been Higher

The financial impact of cybercrime continues to rise sharply. In 2024, the global average cost of a data breach reached $4.9 million, representing a 10% increase from the previous year.

Even more concerning, projections suggest cybercrime costs could reach $24 trillion by 2027. This surge is largely driven by AI-powered attacks that operate autonomously and adapt in real time.

At the same time, the generative AI cybersecurity market reflects this urgency. Analysts expect it to grow nearly tenfold between 2024 and 2034. Organizations are investing heavily in AI-based defenses because traditional, signature-based security tools can no longer keep up with adaptive threats.

This AI cybersecurity arms race is accelerating as attackers and defenders adopt increasingly autonomous systems.

A New Breed of AI-Powered Cyber Threats

The evolution of AI-driven malware represents a major shift from static attack methods to dynamic, learning systems. Unlike conventional malware, modern threats can adjust their behavior based on the defenses they encounter.

Key Capabilities of AI-Driven Attacks

Autonomous Operation:

Once deployed, AI-powered malware can function without human input. It can spread across networks and adjust tactics automatically.

Real-Time Adaptation:

As these systems encounter security controls, they analyze them and change strategies on the fly to exploit weaknesses.

Targeted Intelligence:

AI enables attackers to identify high-value assets such as financial records, proprietary data, and intellectual property.

Speed and Scale:

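Reported average breakout times, the interval between an initial compromise and the start of lateral movement across the network, have fallen to about 48 minutes. That is far faster than most security teams can respond.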

Because of this speed and intelligence, organizations are often outpaced before they even realize an attack has begun.

The Dark Side: How Attackers Weaponize AI

Deepfakes: The New Face of Fraud

Deepfake technology has created a serious trust crisis in digital communication. The data highlights the scale of the problem.

For example, deepfake fraud attempts increased by 1,740% in North America between 2022 and 2023. In the first quarter of 2025 alone, 179 deepfake incidents were recorded—surpassing the total for all of 2024 by 19%.

At the same time, deepfakes now account for 6.5% of all fraud attacks, marking a 2,137% increase since 2022. Financial losses exceeded $200 million in Q1 2025 alone.
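Percentage increases this large are easier to read as multipliers. The short sketch below converts the figures quoted above; the helper names are illustrative and the only inputs are the numbers already given in the text.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a before/after multiplier."""
    return 1 + percent_increase / 100


def implied_baseline(current: float, percent_above: float) -> float:
    """Recover the earlier value from a current value that exceeds it by a percentage."""
    return current / growth_multiplier(percent_above)


# Figures quoted in the text above.
print(round(growth_multiplier(1740), 2))  # fraud attempts, 2022 to 2023
print(round(growth_multiplier(2137), 2))  # deepfake share of fraud since 2022
print(round(implied_baseline(179, 19)))   # implied incident count for all of 2024
```

In other words, a 1,740% increase means roughly 18 times as many attempts, a 2,137% increase means roughly 22 times, and 179 incidents being 19% above the 2024 total implies about 150 incidents for all of 2024.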

What makes this threat even more dangerous is accessibility. Voice cloning now requires just 20–30 seconds of audio. Convincing video deepfakes can be produced in under an hour using widely available tools. As a result, deepfake technology is no longer limited to nation-state actors.

A real-world example illustrates the risk clearly. Attackers attempted to impersonate Ferrari CEO Benedetto Vigna using an AI-cloned voice that closely matched his accent. The attempt failed because a suspicious executive asked a question that only the real CEO could answer.
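The Ferrari case suggests a simple rule: a request that arrives over a channel that can be faked should not be acted on until it has been confirmed another way. Here is a minimal sketch of such a rule, assuming a hypothetical payment workflow. The threshold, channel names, and `PaymentRequest` fields are all illustrative assumptions, not a description of any real company's controls.

```python
from dataclasses import dataclass

# Hypothetical policy values, chosen only for illustration.
CALLBACK_THRESHOLD_USD = 10_000
SPOOFABLE_CHANNELS = {"video_call", "voice_call", "email", "chat"}


@dataclass
class PaymentRequest:
    requester: str
    amount_usd: float
    channel: str
    # True once the request was confirmed separately, for example by a
    # callback to a number already on file or a pre-agreed challenge question.
    verified_out_of_band: bool = False


def may_release(request: PaymentRequest) -> bool:
    """Return True only if no extra check is needed or the check has passed."""
    needs_check = (
        request.channel in SPOOFABLE_CHANNELS
        and request.amount_usd >= CALLBACK_THRESHOLD_USD
    )
    return (not needs_check) or request.verified_out_of_band


urgent = PaymentRequest("cfo", 1_000_000, "video_call")
print(may_release(urgent))  # blocked until verified out of band

urgent.verified_out_of_band = True
print(may_release(urgent))  # released after independent confirmation
```

The point of the design is that how convincing the caller looks or sounds never enters the decision. Only the independent confirmation does.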

AI-Generated Phishing: Precision at Scale

Traditional phishing relied on generic messages and obvious errors. However, AI has completely changed this approach.

Today, an estimated 82.6% of phishing emails use AI in some form. Hackers using large language models have reduced phishing creation costs by 95% while maintaining or improving success rates.

Moreover, 86% of business leaders responsible for cybersecurity reported at least one AI-related incident in the past year.

AI-powered phishing campaigns now analyze social media activity, writing styles, and professional relationships. As a result, these attacks bypass spam filters by closely mimicking legitimate communication.

The First AI-Powered Ransomware

In 2025, researchers identified PromptLock, the first known AI-powered ransomware capable of exfiltrating, encrypting, and potentially destroying data.

Although it was likely a proof of concept, its capabilities were alarming. Such systems could autonomously scan networks, identify valuable data, adapt encryption strategies, and generate tailored ransom demands.

Similarly, BlackMatter ransomware has been cited as an example of how AI-driven encryption and real-time defense analysis could evade traditional detection systems.

Supply Chain Attacks Reach Record Levels

Supply chain attacks reached record highs in October 2025, with 41 incidents reported. This figure was more than 30% higher than the previous peak.

Threat actors such as Qilin, Akira, and Sinobi have targeted industries including healthcare, construction, IT, energy, and professional services. Because of interconnected systems, a single breach can now cascade across multiple organizations.

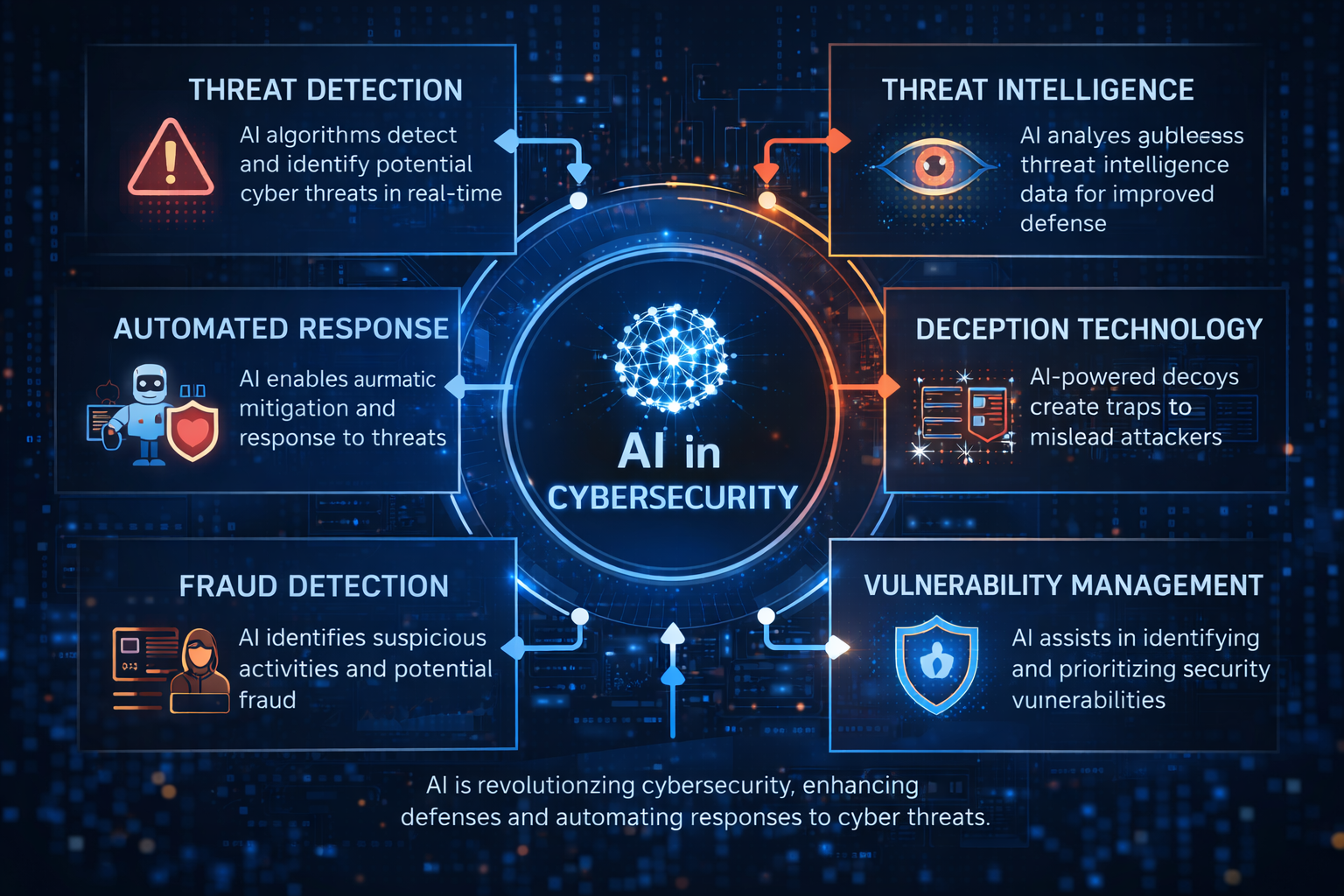

The Bright Side: AI as a Cybersecurity Defender

For defenders, the AI cybersecurity arms race creates both urgency and opportunity.

The Financial Case for AI Defense

Despite these risks, AI also delivers measurable defensive benefits. Organizations that consistently use AI and automation save an average of $2.2 million per breach.

Similarly, companies with extensive AI security adoption report average breach costs of $3.84 million—saving nearly $1.9 million compared to those without AI-driven defenses.
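The two figures above also imply a baseline. As a quick check, the sketch below derives the approximate breach cost without AI-driven defenses and the relative saving. Both derived values are simple arithmetic on the numbers in the text, not additional reported data.

```python
ai_cost_musd = 3.84  # average breach cost with extensive AI adoption (from the text)
savings_musd = 1.9   # approximate saving versus no AI-driven defenses (from the text)

# Derived values: arithmetic on the two quoted figures.
implied_no_ai_cost = ai_cost_musd + savings_musd
relative_saving = savings_musd / implied_no_ai_cost

print(f"Implied cost without AI: ${implied_no_ai_cost:.2f}M")
print(f"Relative reduction: {relative_saving:.0%}")
```

This works out to roughly $5.7 million per breach without AI-driven defenses, so extensive adoption corresponds to a reduction of about one third.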

These savings stem from faster detection, automated responses, and reduced reliance on manual processes.

How AI Improves Security Operations

AI-powered security systems operate across four core stages:

Detection:

AI identifies unusual activity, even for previously unseen threats.

Analysis:

Suspicious behavior is evaluated in seconds using massive data sets.

Response:

Confirmed threats trigger immediate actions such as isolating systems or blocking access.

Recovery:

After incidents, AI improves future detection using learned patterns.
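The four stages above can be sketched as a small pipeline. This is a toy illustration under stated assumptions. A simple z-score test stands in for the machine-learning models a real product would use, and the host name, event fields, and threshold are hypothetical.

```python
from statistics import mean, stdev


def detect(baseline: list[float], value: float, threshold: float = 3.0) -> bool:
    """Detection: flag values far from the baseline mean (z-score test)."""
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


def analyze(event: dict) -> str:
    """Analysis: toy severity rule; a real system would correlate many signals."""
    return "high" if event["privileged"] else "low"


def respond(event: dict, severity: str) -> str:
    """Response: choose a containment action (returned as text, not executed)."""
    if severity == "high":
        return f"isolate {event['host']}"
    return f"alert on {event['host']}"


def recover(baseline: list[float], value: float, was_malicious: bool) -> list[float]:
    """Recovery: fold benign observations into the baseline for future detection."""
    return baseline if was_malicious else baseline + [value]


# Hypothetical data: logins per hour for one account.
baseline = [4, 5, 6, 5, 4, 6, 5]
event = {"host": "ws-017", "privileged": True, "logins_per_hour": 40}

if detect(baseline, event["logins_per_hour"]):
    severity = analyze(event)
    action = respond(event, severity)
    baseline = recover(baseline, event["logins_per_hour"], was_malicious=True)
    print(action)
```

Keeping each stage as a separate function mirrors the staged model described above and lets any one stage be swapped out, for example replacing the z-score test with a trained model, without touching the others.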

Integration Challenges and Governance Risks

Despite its benefits, AI adoption is not easy. A global cybersecurity talent shortage of 2.8–4.8 million professionals limits effective deployment.

In addition, many organizations struggle with legacy system integration, budget constraints, and lack of internal expertise.

Another growing risk is Shadow AI. Employees using unsanctioned AI tools create blind spots that attackers can exploit. Because of this, governance and visibility are now critical.
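One way to gain that visibility is to compare outbound traffic against a list of approved AI services. The sketch below assumes proxy logs are already available as (user, domain) pairs. The domain names and both lists are hypothetical placeholders.

```python
from collections import Counter

# Hypothetical domain lists; a real deployment would maintain these centrally.
KNOWN_AI_DOMAINS = {
    "chat.ai-tool.example",
    "api.llm-vendor.example",
    "notes.ai-helper.example",
}
SANCTIONED_AI_DOMAINS = {"api.llm-vendor.example"}


def find_shadow_ai(log_entries: list[tuple[str, str]]) -> Counter:
    """Count requests per (user, domain) to AI services that are not sanctioned."""
    unsanctioned = KNOWN_AI_DOMAINS - SANCTIONED_AI_DOMAINS
    return Counter(
        (user, domain) for user, domain in log_entries if domain in unsanctioned
    )


logs = [
    ("alice", "api.llm-vendor.example"),  # sanctioned, ignored
    ("bob", "chat.ai-tool.example"),      # unsanctioned
    ("bob", "chat.ai-tool.example"),      # unsanctioned
    ("carol", "intranet.example"),        # not an AI service, ignored
]
for (user, domain), count in find_shadow_ai(logs).items():
    print(f"{user} -> {domain}: {count} request(s)")
```

A report like this is a starting point for governance conversations rather than an enforcement mechanism, since it only sees traffic that passes through the monitored proxy.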

Policy, Regulation, and AI Governance

Without oversight, the AI cybersecurity arms race increases systemic risk across industries.

Governments are responding to AI’s dual-use nature. The EU AI Act mandates transparency for AI-generated content, while NIST’s Cyber AI Profile provides guidance for securing AI systems and countering AI-enabled attacks.

However, the most damaging incidents often result from governance failures rather than technical limitations. Clear policies, oversight, and compliance frameworks are essential.

Best Practices for AI-Enhanced Cybersecurity

Organizations should adopt Zero Trust architectures, deploy multilayered AI defenses, and prioritize employee training. In addition, deepfake detection tools, AI-powered phishing filters, and automated response plans are no longer optional.

Because attack breakout times are now measured in minutes, response planning and simulations must include AI-driven scenarios.

The Future of the AI Cyber Arms Race

Looking ahead, autonomous AI defense systems, post-quantum cryptography, and AI-powered threat hunting will define the next phase of cybersecurity.

At the same time, regulatory pressure will increase, requiring greater transparency, reporting, and cross-border cooperation.

Conclusion: Navigating the AI Paradox

The Arup deepfake attack marked a watershed moment. With AI-enabled fraud projected to reach $40 billion by 2027, the threat is no longer theoretical.

The core challenge in 2025 is not whether AI will shape cybersecurity, but whether organizations can adapt fast enough. This requires moving from reactive to proactive defense, from perimeter security to Zero Trust, and from isolated efforts to shared intelligence.

AI is a double-edged sword. Used wisely, it strengthens defenses against the most advanced threats ever created. Used poorly—or ignored entirely—it becomes the weapon that defeats us.

Winning the AI cybersecurity arms race requires combining advanced technology with human judgment and strong governance.
